Panelists:

Michael M. Cohen, University of California at Santa Cruz

Frederic Parke (moderator), Texas A&M University

Doug Sweetland, Pixar

Keith Waters, Digital Equipment Corporation

Facial animation is now attracting more attention than ever before in its 25 years as an identifiable area of computer graphics. Imaginative applications of animated graphical faces are found in sophisticated human-computer interfaces, interactive games, multimedia titles, VR telepresence experiences, and, as always, in a broad variety of production animations. Graphics technologies underlying facial animation now run the gamut from keyframing to image morphing, video tracking, geometric and physical modeling, and behavioral animation. Supporting technologies include speech synthesis and artificial intelligence. Whether the goal is to synthesize realistic faces or fantastic ones, representing the dynamic facial likeness of humans and other creatures is giving impetus to a diverse and rapidly growing body of cross-disciplinary research. The panel will present a historical perspective, assess the state of the art, and speculate on the exciting future of facial animation.

Human facial expression has been the subject of scientific investigation for more than one hundred years. Computer based facial expression modeling and animation is not a new endeavor [1]. Initial efforts in this area go back well over 25 years. Increasingly complex computer animated characters demand expressive, articulate faces. It is interesting that most of the currently employed techniques involve principles developed in the research community some years ago---in some cases, several decades ago.

The earliest work with computer based facial representation was done in the early 1970's. In 1971 Chernoff proposed the use of two-dimensional faces as a way to represent k-dimensional data. The first three-dimensional facial animation was created by Parke in 1972. In 1973 Gillenson developed an interactive system to assemble and edit line drawn facial images. And in 1974, Parke developed a parameterized three-dimensional facial model.

The early 1980's saw the development of the first physically based muscle-controlled face model by Platt and the development of techniques for facial caricatures by Brennan. In 1985, the short animated film ``Tony de Peltrie'' was a landmark for facial animation. In it, for the first time, computer facial expression and speech animation were a fundamental part of telling the story.

The late 1980's saw the development of a new muscle based model by Waters, the development of an abstract muscle action model by Magnenat-Thalmann and colleagues, and approaches to automatic speech synchronization by Lewis and by Hill.

The 1990's have seen increasing activity in the development of facial animation techniques and the use of computer facial animation as a key story telling component as illustrated in the recent film ``Toy Story.''

If past trends are a valid indicator of future developments, the next decade should be a very exciting time to be involved in computer facial animation. Driven by increases in computational power, the development of more effective modeling and animation techniques, and the insatiable need of animation production companies for ever more capable computer animated characters, the quantity and quality of facial animation will increase many fold.

At Pixar, facial animation is achieved by moving individual muscles on the face. This gives the animator incredibly acute control over the aesthetics and choreography of the face. With all of these controls to oversee, an animator has to have a complete sense of the result desired on screen.

The Pixar studio produces broad-based acting in feature animation; hence, the most important considerations are facial appearance and the meaning that the face conveys. Hopefully, before an animator begins working on the face, the character's body has been well animated and/or possesses the proper attitude. A good strategy is to draw ``thumbnails,'' small sketches of the desired appearance of the face. Here an animator should think about the graphic design both in the small and in the large: from the relationship of one eyebrow to the other, to the interrelationship of all the facial features, to how the face relates to head position relative to the camera and perhaps even in the context of adjacent shots. The goal is to compose a graphic design with all its elements in place. None of the components are arbitrary and they all contribute towards the final effect.

A keen awareness of the character and its position at that point in the story (for example, the ability to answer questions such as ``What has the character been through up to this point?'', ``What is this character thinking right now?'', and ``What does this character want?'') enables the facial animator to make sensible choices about the desired effect and how to compose the character to achieve the effect. The idea here is to make an intellectual decision about a character's behavior instead of trying to feel your way through it or drawing solely from a reading of the dialogue. Without the proper planning, the result can often seem hackneyed or inconsistent and the performance frequently goes lifeless once the line has been read. Mastering a character's thoughts, even if they are really quite simple or few, is a key to achieving a compelling performance that makes sense in the context of the entire film.

The overall goal in animating the face is to give the illusion that the poses and expressions are motivated by the character instead of being topically manipulated by the animator, despite the fact that the performance is premeditated and requires microscopic attention to detail. Making informed decisions on what the face needs to express and understanding natural facial composition are fundamental to the making of a believable facial performance.

It was hard enough to model and animate the faces of the toy characters in ``Toy Story''. An even more formidable task was to animate the face of Andy, the nice boy who owned the toys, along with the faces of the other members of Andy's family and Sid, the evil boy next door. This ranks prominently among the reasons for the brevity of the ``people scenes'' in the landmark film.

Indeed, a grand challenge in facial animation is the synthesis of artificial faces that look and act like your mother, or like some celebrity, or like any other real and perhaps familiar person. The solution to this challenge will involve not just computer graphics, but also other scientific disciplines such as psychology and artificial intelligence. In recent years, however, good progress has been made in realistic facial modeling for animation.

Creating artificial faces begins with a high fidelity geometric model suitable for animation that accurately captures the facial shape and appearance of a person. Traditionally, this job has been extremely laborious. Fortunately, animators are now able to digitize facial geometries and textures through the use of scanning range sensors, such as the one manufactured by Cyberware, Inc. Facial meshes can be adapted in a highly automated fashion by exploiting image analysis algorithms from computer vision.

A promising approach to realistic facial animation is to create facial models that take into account facial anatomy and biomechanics [2]. Progress has been made on developing facial models that are animated through the dynamic simulation of deformable facial tissues with embedded contractile muscles of facial expression rooted in a skull substructure with a hinged jaw. Facial control algorithms hide the numerous parameters and coordinate the muscle actions to produce meaningful dynamic expressions. When confronting the synthesis of realistic faces, it is also of paramount importance to adequately model auxiliary structures, such as the mouth, eyes, eyelids, teeth, lips, hair, ears, and the articulate neck, each a nontrivial task.

Sophisticated biomechanical models of this sort are obviously much more computationally expensive than traditional, purely geometric models. They can tax the abilities of even the most powerful graphics computers currently available. The big challenge is to make realistic facial models both flawless and a joy for animators to use. An intriguing avenue for future work is to develop brain and perception models that can imbue artificial faces with some level of intelligent behavior. Then perhaps computer animators can begin to employ realistic artificial faces the way film directors employ human faces.

Facial animation has progressed significantly over the past few years and a variety of algorithms and techniques now make it possible to create highly realistic looking characters: 3D scanners and photometric techniques are capable of creating highly detailed geometry of the face, algorithms are capable of emulating muscle and skin that approximates real facial expressions, and synthetic and real speech can be accurately synchronized to graphical faces.

Despite the technological advances, facial animation is humbled by some simple issues. As the realism of the face increases (making the synthetic face look more like a real person), we become much less forgiving of imperfections in the modeling and animation: If it looks like a person we expect it to behave like a person. This is due to the fact that we are extremely sensitive to reading small and very subtle facial characteristics in everyday life. Evidence suggests that our brains are even ``hard-wired'' to interpret facial images.

An alternative is to create characters that have non-human characteristics, such as dogs and cats. In this case we are desensitized to imperfections in the modeling and animation because we have no experience of talking dogs and cats. Taking this further, two dots and an upward curving line can convey as much information about the emotion of happiness as a complex 3D facial model whose facial muscles extend simulated skin at the corners of the mouth.

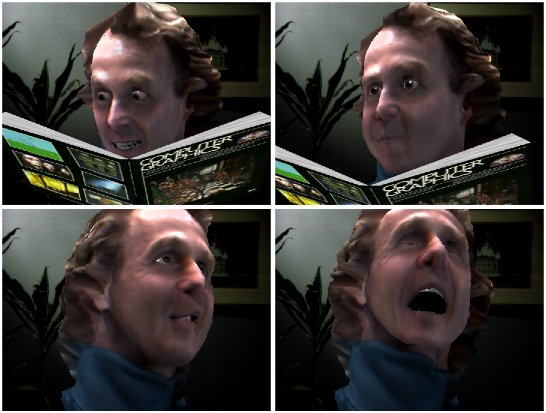

Understanding where some of these boundaries in facial animation exist helps us build new and exciting artifacts. We have constructed two scenarios to explore such novel forms of human computer interaction with real-time faces. The first is a talking face on the desktop [3]. The second is a Smart Kiosk, where the human-computer interaction is governed by the visual sensing of users in the environment [4].

At the UC-Santa Cruz Perceptual Science Laboratory we have developed a high quality visual speech synthesizer - a computer-animated talking face - incorporating coarticulation (the interaction between nearby speech segments). Our visual synthesis works in tandem with acoustic text-to-speech synthesis which supplies the acoustic speech as well as segmental and other linguistic information for facial control. Goals for this technology include gaining an understanding of the visual information that is used in speechreading, how this information is combined with auditory information, and its use as an improved channel for man/machine communication.

Our software [5] is a descendant of that first made by Fred Parke, incorporating additional and modified control parameters, a tongue, and a new visual speech synthesis control strategy. An important element of our visual speech synthesis software has been the development of an algorithm for articulator control which takes coarticulation into account. Our approach to the synthesis of coarticulated speech is based on an articulatory gesture model described by Lofqvist in 1990. In this model, a speech segment has dominance over the vocal articulators which increases and then decreases over time during articulation. Adjacent segments have overlapping dominance functions, which leads to a blending over time of the articulatory commands related to these segments. We have instantiated this model in our synthesis algorithm using negative exponential functions for dominance. Given that articulation of a segment is implemented by many articulators, there is a separate dominance function for each control parameter. Each phoneme is specified in terms of the speech control parameter target values and the dominance function characteristics of magnitude, time offset, and leading attack and trailing decay rates.
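As a rough illustration of the dominance-function blending described above, the following Python sketch computes one control parameter over time as a dominance-weighted average of segment targets. The specific functional form (a plain negative exponential of the time distance from the peak), the names `Segment`, `dominance`, and `blend`, and all numeric values are assumptions made for this example; they are not taken from the synthesis software itself.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    """Spec of one speech segment for a single control parameter (illustrative)."""
    target: float     # control parameter target value
    center: float     # time of peak dominance in seconds (segment time plus offset)
    magnitude: float  # peak dominance
    attack: float     # leading rate: how fast dominance rises before the peak
    decay: float      # trailing rate: how fast dominance falls after the peak


def dominance(seg: Segment, t: float) -> float:
    """Negative exponential dominance that peaks at seg.center."""
    tau = t - seg.center
    rate = seg.attack if tau < 0 else seg.decay
    return seg.magnitude * math.exp(-rate * abs(tau))


def blend(segments: list[Segment], t: float) -> float:
    """Dominance-weighted average of the segment targets at time t.

    Assumes at least one segment has non-negligible dominance at t.
    """
    weights = [dominance(s, t) for s in segments]
    total = sum(weights)
    return sum(w * s.target for w, s in zip(weights, segments)) / total


# Hypothetical lip-protrusion track: a rounded segment followed by an unrounded one.
protrusion = [
    Segment(target=1.0, center=0.10, magnitude=1.0, attack=20.0, decay=20.0),
    Segment(target=0.0, center=0.30, magnitude=1.0, attack=20.0, decay=20.0),
]
for t in (0.10, 0.20, 0.30):
    print(f"t={t:.2f}s  lip protrusion={blend(protrusion, t):.3f}")
```

Because each control parameter has its own dominance function, a full synthesizer would hold one such segment list per parameter. Lowering a segment's `attack` rate makes its target start influencing the articulator earlier, which is one way anticipatory coarticulation can arise in a model of this kind.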

One of the goals of our synthesis is to have a talking head that articulates as clearly as (or even more clearly than) human talkers. An essential component of the development process is an evaluation of the synthesis quality. This analysis of the facial synthesis may be seen as a validation process. By validation, we mean a measure of the degree to which our synthetic faces mimic the behavior of real faces. Confusion matrices and standard tests of intelligibility are being utilized to assess the quality of the facial synthesis relative to the natural face. These results also highlight those characteristics of the talking face that could be made more informative. In a typical study [6], we presented short English words (e.g., sing, bin, dung, dip, seethe) silently for identification, produced either by a natural speaker or by our synthetic talker, randomly intermixed. By comparing the overall proportion correct and analyzing the perceptual confusions, we can determine how closely the synthetic visual speech matches the natural visual speech. Because of the difficulty of speechreading, we expect confusions for both the natural and synthetic visual speech. The questions to be answered are how many confusions occur and how similar the patterns of confusion are for the two talkers.
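The comparison described above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, the trial data are made up for the example and are not results from the study, and using a Pearson correlation over confusion-matrix cells as the similarity measure is an assumption rather than the analysis reported in [6].

```python
import math
from collections import Counter


def proportion_correct(trials):
    """Fraction of (presented, response) pairs where the response matches."""
    return sum(1 for presented, response in trials if presented == response) / len(trials)


def pattern_similarity(trials_a, trials_b):
    """Pearson correlation between the cells of two confusion matrices.

    Each matrix cell counts how often a presented word drew a given response.
    Undefined (division by zero) if either matrix has identical counts in every cell.
    """
    a, b = Counter(trials_a), Counter(trials_b)
    labels = sorted({word for pair in list(a) + list(b) for word in pair})
    cells = [(p, r) for p in labels for r in labels]
    xs = [a[c] for c in cells]
    ys = [b[c] for c in cells]
    n = len(cells)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Made-up toy trials, for illustration only: (word presented, word reported).
natural = [("bin", "bin"), ("bin", "dip"), ("dip", "dip"), ("sing", "sing")]
synthetic = [("bin", "bin"), ("bin", "dip"), ("dip", "bin"), ("sing", "sing")]

print("natural correct:  ", proportion_correct(natural))
print("synthetic correct:", proportion_correct(synthetic))
print("pattern similarity:", round(pattern_similarity(natural, synthetic), 3))
```

A high similarity together with comparable proportions correct would suggest that the synthetic talker is confused with the same words as the natural one, while a low similarity points to segments whose visible articulation differs between the two.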

Recently we have ported our software from our original SGI IRIS-GL environment to OpenGL under Windows NT. Using an inexpensive graphics board we can achieve real-time performance at 30+ frames/second on a 200 MHz Pentium Pro. We have also combined our visual-auditory synthesis software with automatic speech recognition (OGI Toolkit) in structured dialog, and will demonstrate a sample application. We will also discuss some of our future plans for visual-auditory speech with emotion and the use of conversational backchanneling.

Creating character animation in a production environment presents many challenges to the animator: the wide variety of projects presents diverse needs and requirements. PDI has concentrated on character animation for years, working on many different types of characters. With each new character, the character's design, its animation style, and the length of the project are all factors in developing the facial animation system. A facial animation system for a cartoon character who has exaggerated, extreme facial expressions may not be appropriate for a character who must display very subtle, human-like expressions. Two recent projects at PDI demonstrate two very different facial animation solutions.

The ``Simpsons'' project involved animating a character with distinct, recognizable, exaggerated facial expressions. In addition, with limited lead time, we had to develop a solution in a relatively short time frame. We created a shape interpolation-based system with layered high-level deformation controls. This allowed animators to specify exaggerated expressions with relative ease. They could then use lower-level controls to refine the animation of individual facial features.

Our upcoming ``ANTZ'' film project, on the other hand, requires a wide variety of detailed, human-based expressions. The lead time for the film project is much greater, allowing the development of a more elaborate, robust facial animation system. We created an anatomically-based facial muscle system for ANTZ. Higher-level expression libraries are used to block in the main expressions. Lower-level muscle controls are then animated as the motion is refined.

Each system achieved the desired results for its particular project. The common element was to provide a layered approach for the animators. High-level controls block out overall expressions and timing, allowing the animator to easily refine overall timing without having to adjust many controls. Mid-range controls are used to start offsetting timing of individual features and to begin including unique movements to the expressions. Finally, low-level controls allow the animator to introduce a final level of detail to the animation. Layered controls allow facial animators to work efficiently, no matter what type of underlying animation system is being used.
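One way to picture a layered control scheme on top of shape interpolation is the minimal Python sketch below. It collapses the layers into two for brevity (a high-level expression library and low-level per-control offsets), and every name, shape, and weight in it is hypothetical; it is not a description of either production system.

```python
def interpolate_shapes(neutral, targets, weights):
    """Shape interpolation: add each target's weighted offset from the neutral shape.

    Shapes are flat lists of vertex coordinates of equal length.
    """
    result = list(neutral)
    for name, weight in weights.items():
        for i, (rest, shaped) in enumerate(zip(neutral, targets[name])):
            result[i] += weight * (shaped - rest)
    return result


# High-level layer (hypothetical): an expression maps to low-level control weights.
EXPRESSION_LIBRARY = {
    "smile": {"mouth_corner_up": 1.0, "cheek_raise": 0.6},
}


def layered_weights(expression, amount, offsets=None):
    """Block in an expression, then apply additive low-level refinements."""
    weights = {name: amount * w for name, w in EXPRESSION_LIBRARY[expression].items()}
    for name, delta in (offsets or {}).items():
        weights[name] = weights.get(name, 0.0) + delta
    return weights


# Tiny made-up face: two 2D points stored as [x0, y0, x1, y1].
NEUTRAL = [0.0, 0.0, 1.0, 0.0]
TARGETS = {
    "mouth_corner_up": [0.0, 0.2, 1.0, 0.2],
    "cheek_raise": [0.1, 0.0, 1.1, 0.0],
}

weights = layered_weights("smile", 0.5, offsets={"cheek_raise": 0.1})
print(weights)
print(interpolate_shapes(NEUTRAL, TARGETS, weights))
```

The design point is that the animator changes one number (`amount`) to retime or rescale the whole expression, and touches individual controls only for the final refinements.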

[1] F.I. Parke and K. Waters, ``Computer Facial Animation,'' A K Peters, 1996.

[2] Y. Lee, D. Terzopoulos, and K. Waters, ``Realistic modeling for facial animation,'' Proc. ACM SIGGRAPH 95 Conf., pp. 55-62, 1995.

[3] K. Waters and T. Levergood, ``DECface: A System for Synthetic Face Applications,'' Multimedia Tools and Applications, 1, pp. 349-366, 1995.

[4] K. Waters, J. Rehg, M. Loughlin, S. B. Kang, and D. Terzopoulos, ``Visual Sensing of Humans for Active Public Interfaces,'' Digital Cambridge Research Lab TR 95/6, March 1996.

[5] M. M. Cohen and D. W. Massaro, ``Modeling coarticulation in synthetic visual speech,'' in N. M. Thalmann and D. Thalmann (Eds.), Models and Techniques in Computer Animation, Tokyo: Springer-Verlag, pp. 139-156, 1993.

[6] M. M. Cohen, R. L. Walker, and D. W. Massaro, ``Perception of Synthetic Visual Speech,'' Speechreading by Man and Machine: Models, Systems and Applications, NATO Advanced Study Institute 940584, Chateau de Bonas, France, Aug 28-Sep 8, 1995.